The Future of Search in 2023: Google Goes Multi-Modal

In recent months, Google has been slowly acclimating the public to a new way of thinking about search that is likely to be a hallmark of our future interactions with the platform.

Searching the internet has been, since its inception, a text-based activity, built on the concept of locating the best match between the searcher’s intent and a set of results displayed as text links and content snippets.

But in this emerging phase, search is becoming increasingly multi-modal: able, in other words, to handle input and output in various formats, including text, images, and sound. At its best, multi-modal search is more intuitive and convenient than traditional methods.

At least some of the impetus for Google’s move toward thinking of search as a multi-modal activity comes from the rise of social media platforms like Instagram, Snapchat, and TikTok, all of which have shifted user expectations toward highly visual, immediate interaction with content. As a veteran internet company, Google has moved to keep pace with these changing expectations.

The Emergence of Multisearch

Google has poured immense development resources into Google Lens, Vision AI, and other components of its sophisticated image recognition technology, which represent the next evolution of tools like Google Images.

Google Lens is fairly well established as a search tool that lets you quickly translate road signs and menus, research products, identify plants, or look up recipes simply by pointing your phone’s camera at the object you want to search for.

This year, Google introduced the concept of “multisearch,” which allows users to add text qualifiers to image searches in Lens. You can now take a photo of a blue dress and ask Google to look for it in green, or add “near me” to see local restaurants that offer dishes matching an image.

The Image Icon Joins the Voice Icon

In a further nudge toward image-based search, Google also recently added an image icon to the main search box at google.com.

The image icon takes its place alongside the microphone, Google’s prompt to search by voice. In the early days of Amazon Alexa and its ilk, voice search was supposed to take over the internet. That didn’t quite happen, but voice search has since grown to occupy a useful niche in our arsenal of methods for interacting with devices, convenient when talking is faster or safer than typing. So too, hearing Google Assistant or Alexa read search results out loud will sometimes be preferable to reading text on a screen.

This brings us to the vision of a multi-modal search interface: users should be able to search by, with, and for any medium that is the most useful and convenient for the given circumstance.

A voice prompt to “show me pictures of unicorns” might work best for a child still learning to read; an image-based input potentially conveys more information than any short text phrase regarding the color, texture, and detailed features of a retail product. It’s safe to assume that any combination of text, voice, and image will soon be supported for both inputs and outputs.

Marketing in the World of Multi-modal Search

What does all of this mean for marketers? Those who want to increase the online exposure of businesses and their offerings would do well to focus on two priorities.

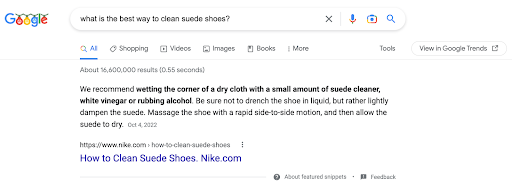

The first is to provide content for consumption in search that is not just promotional but also useful. With consumers being trained to ask questions of all kinds and receive responses that help them stay informed and make better decisions, marketers need to compete to provide answers and advice, in addition to promoting the availability of their products or services. Google uses Featured Snippets, for example — the answers showcased at the top of search results — as content to be read aloud by Google Assistant when users ask questions, offering a great opportunity to increase brand exposure and to be recognized as an authoritative industry voice.

Image Optimization is Key

The other major priority for marketers in the age of multi-modal search is image optimization. Google’s Vision AI technology provides the company with an automated means of understanding the content of pictures. With its image recognition technology — an important facet of Google’s Knowledge Graph, which creates linkages between entities as a way of understanding internet content — the company is transforming search results for local and product searches into immersive, image-first experiences, matching featured images to search intent.

Marketers who publish engaging photo content in strategic places stand to win in Google’s image-rich search results. In particular, e-commerce websites and store landing pages, Google Business Profiles, and product listings uploaded to Google’s Merchant Center should showcase photos that correspond to the search terms a company hopes to rank for. Photos should be augmented with descriptive text, but Google can interpret and display photos that match a searcher’s query even without text descriptions.

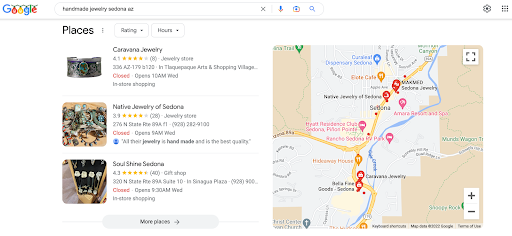

A search for “handmade jewelry in Sedona, Arizona,” for example, returns Google Business Profiles in the results, each of which displays a photo pulled from the profile’s image gallery that corresponds to what the user was searching for.

Rising Up in Search

The new shopping experience in search, announced by Google this fall, can be invoked by typing “shop” at the beginning of any query for a product. The results are dominated by images from retail websites, matched precisely to the search query entered by the user.

Food and retail are on the cutting edge of multi-modal search. In these categories, marketers already need to be actively working on image optimization and content marketing with various media use cases in mind. For other business categories, multi-modal search is coming.

Wherever it’s more convenient to use pictures in place of text or voice in place of visual display, Google will want to make these options available across all business categories. It’s best to get ready now for the multi-modal future.

About the Author

With over a decade of local search experience, Damian Rollison, SOCi’s Director of Market Insights, has focused his career on discovering innovative ways to help businesses large and small get noticed online. Damian’s columns appear frequently at Street Fight, Search Engine Land, and other publications, and he is a frequent speaker at industry conferences such as Localogy, Brand Innovators, State of Search, SMX, and more.

via The Future of Search in 2023: Google Goes Multi-Modal

8 Ways to Optimize Your Facebook Page

Facebook is reported to have over 2.9 billion monthly active users. Obviously, it’s the marketing channel no business can ignore.

Yet, too many small businesses do not understand how to utilize the platform properly: a study of over 323 companies globally found that most businesses find Facebook marketing increasingly competitive.

The problem is that there’s no simple answer as to how to translate Facebook likes into ROI. It all comes down to months (and years) of building up your social media presence using a solid strategy.

But before you start working on that strategy and start investing in ads, there’s some groundwork to be done.

You need to optimize your Facebook page to translate those efforts into actual clicks and conversions. You need to set up your page to help your new followers quickly understand what your business is about and how it can help them.

Here are eight steps to walk you through the Facebook page optimization process.

1. Work on Your Page Details, a Checklist

1. Add a profile picture

It’s much more important than your cover image because it will accompany every page update, even when it’s shared to someone else’s timeline.

- The profile picture should be at least 180 x 180 pixels (feel free to go larger) and square.

- The profile picture should be readable when viewed at 36 x 36 pixels (which is what it looks like when appearing in the mobile newsfeed).

Make sure it has your visual branding, including your logo and color palette. If you don’t have a logo yet, here’s a tool to create one for free.

2. Add a cover photo

A cover photo is a nice way to make your page more eye-catching.

- The cover photo displays at 820 x 312 pixels on desktop and 640 x 360 pixels on mobile phones. It must be at least 400 x 150 pixels but, again, feel free to go larger (and higher quality) than that.

- Try changing your cover photo seasonally or when your business has important news.

3. Enable a custom URL or “username”

A username helps people find and remember your page. When you create a username, it appears in a customized web address for your page (for example, facebook.com/yourcompany), which makes the URL easy for people to type.

It also helps a page rank higher for that username. Your username should match the name of your page as closely as possible.

4. Make the most of your page description

Always complete your “Description” field, making sure you use all the allowed 255 characters for original content.

Original content helps your page rank higher. It is a good idea to run your brand name through a semantic analysis tool to find the most relevant context for your description. If your brand name is new, try a competitor’s name instead. Text Optimizer is a quick way to do that.

5. Add your website

This is self-explanatory: you want Facebook users to get to your site easily.

Bonus tip: Link to your Facebook page from your website, too. This will help that page rank higher for your business name, supporting your reputation management efforts. (Here’s a great collection of icons to choose from.)

Apart from the major components listed above, there is more information you can (and should) add:

- Your business contact details (phone number and email)

- Business categories

- A brief list of your products

- Additional description

That last item deserves more detail. While the main description field limits you to 255 characters, the “additional” section lets you tell more about your business. You can add up to 50,000 characters in this field, so get descriptive!

This is very useful because the more original text you have here, the more opportunities you get to rank your page for business-related search queries. You can use this field to:

- Add a short business FAQ.

- Describe your processes and services.

- Tell your founder’s story, etc.

Text Optimizer can also give you more ideas for what to put in the additional description field.
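The checklist above contains several hard numbers that are easy to get wrong when preparing assets. As a minimal sketch, assuming the limits quoted in this article are still current (Facebook revises its specs, so verify them before relying on this), the Python below checks a planned page against those numbers offline. `PageDraft`, `check_page`, and `spare_description_chars` are hypothetical names invented for illustration, not part of any Facebook API; the code only inspects sizes and text you supply and does not read image files or contact Facebook.

```python
from dataclasses import dataclass

# Limits as quoted in this article (hypothetical constants, not a Facebook API).
# Facebook changes its specs over time, so re-check these before relying on them.
PROFILE_MIN_SIDE = 180               # profile picture: square, at least 180 x 180 px
COVER_MIN_W, COVER_MIN_H = 400, 150  # cover photo minimum size
DESCRIPTION_MAX = 255                # main "Description" field
ADDITIONAL_MAX = 50_000              # "additional" description field


@dataclass
class PageDraft:
    """Hypothetical container for the page details you plan to upload."""
    profile_size: tuple[int, int]  # (width, height) in pixels
    cover_size: tuple[int, int]    # (width, height) in pixels
    description: str
    additional: str = ""


def check_page(draft: PageDraft) -> list[str]:
    """Return a list of problems; an empty list means the draft passes."""
    problems = []

    w, h = draft.profile_size
    if w != h:
        problems.append("profile picture is not square")
    if min(w, h) < PROFILE_MIN_SIDE:
        problems.append(f"profile picture is smaller than {PROFILE_MIN_SIDE} px")

    cw, ch = draft.cover_size
    if cw < COVER_MIN_W or ch < COVER_MIN_H:
        problems.append(f"cover photo is below {COVER_MIN_W} x {COVER_MIN_H} px")

    # Treat a whitespace-only description as empty.
    if not draft.description.strip():
        problems.append("description is empty")
    elif len(draft.description) > DESCRIPTION_MAX:
        problems.append(f"description exceeds {DESCRIPTION_MAX} characters")

    if len(draft.additional) > ADDITIONAL_MAX:
        problems.append(f"additional description exceeds {ADDITIONAL_MAX:,} characters")

    return problems


def spare_description_chars(draft: PageDraft) -> int:
    """Characters still available in the main description (never negative)."""
    return max(0, DESCRIPTION_MAX - len(draft.description))


draft = PageDraft((720, 720), (1640, 624), "Family-run bakery serving fresh bread.")
print(check_page(draft))               # → []
print(spare_description_chars(draft))  # → 217
```

The spare-character helper reflects the advice to use all of the description’s allowed characters; the check that the profile picture stays readable at 36 x 36 pixels still has to be done by eye.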

2. Select the Best Template

Facebook offers pre-made page templates to help you optimize your page for the type of business you run, including:

- Services

- Shopping (This one has the built-in Shopping tab which allows you to feature products on Facebook.)

- Business

- Venues

- Non-profits

- Politicians

- Restaurants and Cafés, and more.

The template simply dictates the layout of buttons and tabs that have been found most efficient for specific business types.

You can edit and customize them as much as you like after you pick one.

3. Select the Page CTA

Your page’s call-to-action (CTA) button is located below your cover photo. Facebook currently supports quite a few CTAs, so look for the one most relevant to your business:

- Book services

- Get in touch

- Learn more

- Make a purchase or donation

- Watch the video, etc.

To customize your CTA button, click it and select “Edit Button.” There are plenty of CTA options you can choose from. Some examples of Facebook CTAs include:

- Book now

- Start order

- Call now

- Email now

For each CTA, you’ll need to add the most appropriate landing page where the user will perform the suggested action.

Feel free to experiment with different CTAs: Create a new one when you have a promotion, when you create a new video or a lead magnet, etc. Just don’t do that too often: You need some time to accumulate the data to compare the performance of different CTAs.

4. Add Page Tabs to Promote Your Products or Services

Tabs are sections of your page’s content that appear below its name.

You can also customize which tabs show up on your page. If you enable a tab, make sure to fill it with content. For example, don’t enable the “Videos” tab unless you have videos to share there.

You might, for instance, add the following tabs:

- Offers, to highlight your current offers

- Shop, to list the products you want to feature

- Services, to highlight your services

To change the tabs, go to “Page settings,” then to “Template and Tabs” and scroll to the bottom of the page to view the list of current tabs and the option to add a tab.

5. Enable Reviews

Nearly all potential customers consult online ratings and reviews before making a purchase. I am one of those customers, and I’ve noticed that Facebook reviews tend to be more positive than those on other platforms.

This may be because Facebook is a very personal platform: people don’t go there first to vent their frustration at companies. Instead, they are there to talk to family and friends.

That being said, Facebook is perfect for publicizing your customers’ reviews. To do that, go to “Settings,” and click “Edit” next to “Review” to allow visitors to review your page. If things go wrong (and that may happen to the best of us), you’ll be able to switch those reviews back off going forward.

6. Update and Engage

No optimization tactic can save your Facebook page unless you update it often and engage with your audience. There are a few audience-building hacks you can try:

- Ask questions to engage your followers in discussions

- Post curated content

- Upload short, well-annotated videos

- Stream live videos from (virtual) events

- Tag other business pages (industry tools and non-profits)

- Upload images (collages, quotes, team photos, etc.)

You can use visuals to further market your business, without being too “salesy” or intrusive. There are quite a few tools allowing you to create unique, well-branded images without having to download expensive software. You can create visuals in several sizes at a time and brand those images using your logo. Bulk editing is a lifesaver!

Furthermore, you need to reply to your customers within minutes on Facebook, even if it’s just a polite boilerplate message to let them know you are on it. Your Facebook followers will see how fast you tend to reply on Facebook.

Make sure to use Facebook Insights to measure your (and your team’s) performance. An even better idea is to use one of the all-in-one Social Media Analytics tools that can send regular reports and include your other (as well as your competitors’) social media channels as well.

Speaking of competitors…

7. Research Your Competitors’ Facebook Strategy

You can get great insight into how your competitors are growing their Facebook presence by using these two tools.

via 8 Ways to Optimize Your Facebook Page

20+ Social Proof Examples of (Real) Conversion Boosting Tactics

Social proof examples are everywhere. You’ve experienced it in your own life to some degree:

- Asking for local dining recommendations (“Who makes good sandwiches in that neighborhood?”)

- Inquiring about travel experiences (“How was your hostel stay in Belgium?”)

- Checking Yelp reviews before trying a new business (“How’s the customer service?”)

Social proof is highly effective at stirring up interest and influencing decisions.

Your marketing strategy can leverage social proof to attract customers and boost conversions. We’ll explain what social proof is, how and why it works, and how you can use it in your campaigns.

We’ll also look at real-world examples of how other brands use social proof marketing.

What is Social Proof?

Dr. Robert Cialdini coined the phrase “social proof” in his 1984 book Influence: The Psychology of Persuasion.

It’s the principle that in uncertain situations, people watch and mirror the behavior of those around them.

They conform because they subconsciously believe others know more about the situation and therefore know the right thing to do or say.

This is why you joined that long line at the taco truck. And why you bought that pullup bar on Amazon with the 2,480 reviews and 4.6-star rating. And why you finally started watching Stranger Things.

Everyone else was doing it.

Social proof marketing tactics leverage your existing customers to attract new ones.

20+ Examples of (Effective) Social Proof

Here are 22 social proof examples you can use and how other brands currently employ them in real life.

1. Celebrity Endorsements

In 2017, 57% of people aged 16-64 reported discovering brands through celebrity endorsements. In 2021, 59% of Americans over 18 admitted that a celebrity endorsement influenced their purchasing decision.

Celebrity social proof, whether an organic or a paid endorsement, drastically increases brand awareness because celebrities have millions of followers.

Nike, for example, made an endorsement deal with Michael Jordan in 1984 that now earns the company $3M in sales every 5 hours!

2. Expert Endorsements

Cialdini told Harvard Business Review:

“When people are uncertain … they look outside for sources of information that can reduce their uncertainty. The first thing they look to is authority: What do the experts think about this topic?”

An endorsement from an industry expert lends credibility to your brand and helps create brand trust.

Sensodyne’s website and YouTube page feature videos of real dentists explaining how Sensodyne works and endorsing it.

3. User-Generated Content

User-generated content (UGC) is any content your customers create. This includes photos, videos, audio, reviews, ratings, comments, or blog posts.

UGC is honest, organic content that increases brand awareness via @mentions or hashtags. Ask your social media followers for permission to use this content in your marketing.

Zapier’s carousel of satisfied customer tweets on its website’s homepage is a good example of this tactic.

4. Influencer Marketing

Influencers consistently create and publish popular web content. They also regularly engage with their social media followers.

They’ve developed a brand around their passion and built a robust, faithful community that shares that passion.

Their community trusts them and relates to them more than to celebrities, who seem far removed from everyday life. So followers trust influencers’ recommendations far more.

Reach out to these “mini-celebrities” to create a paid partnership. BetterHelp has one with YouTubers Super Carlin Brothers, who promote the service in many of their videos.

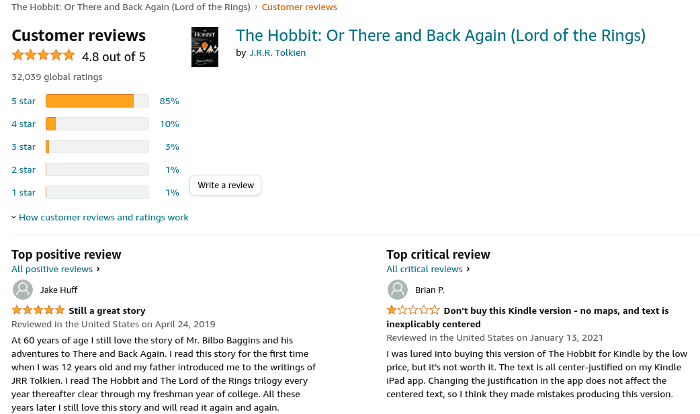

5. Customer Reviews & Ratings

People regularly check customer reviews and ratings before trying anything new. They want to know how others feel about particular products and services.

In one 2021 survey, nearly 70% of online shoppers checked between one and six online reviews before making a purchase.

Create a listing on an online review site like Yelp or Google Business Profile. You can also put a reviews section on your website and Facebook page. Draw attention to high ratings and to when you get a positive review.

Amazon’s customer review section is a great model for creating your own.

6. Testimonials

A customer testimonial is slightly different from a customer review.

Customers can leave positive or negative reviews at will, but you must request a testimonial from a satisfied customer. As such, testimonials tend to be very positive.

Also, testimonials persuade through their quality, whereas reviews persuade through sheer quantity.

A good testimonial should include details and a customer photo to add credibility. Or, even better, you can get a video testimonial.

BuzzSumo devotes a section of its website explicitly to testimonials.

7. Media Coverage Archives

Like expert social proof, media mentions can increase brand trust. Scoring coverage in a well-established, reputable publication powerfully shapes your brand’s reputation.

Mention and link to any earned media on your social platforms and other marketing channels.

You can create a media archive on your website if you earn multiple media mentions. Check out FlexJobs for a great example of this tactic.

8. Social Share Count

Social share count is the number of times your URL has been shared on social media channels.

This is invaluable analytics data because it reveals the level of interest generated. It also builds brand trust and increases brand awareness by encouraging website visitors to share your content.

Use an analytics tool or social share counter to see your social share count as a whole or for each platform.

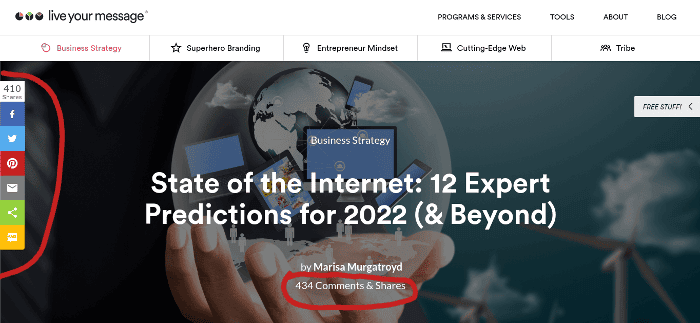

Live Your Message uses ShareThis to stick the share count and share buttons to the side of each blog post. The brand also displays the total number of comments and shares under each post’s headline.

9. Certifications

Certifications showcase your expertise in particular areas. Highlighting them in your marketing strategy establishes you as an industry expert and fosters a sense of trust among potential customers.

The tax professionals at Block Advisors by H&R Block are all Small Business Certified, so the certification badge appears on each one’s photo throughout the site.

The bottom of the website bears the TRUSTe Certified Privacy badge. This indicates that customers can trust Block Advisors with their personal information.

10. Rankings

Consumers search for and compare top options when deciding what to buy. Hence all the list posts across the internet that rank everything from vacation destinations to energy drinks.

Emphasizing your high rankings shows potential customers where you stand against the competition. You can display this info on all your marketing channels and materials.

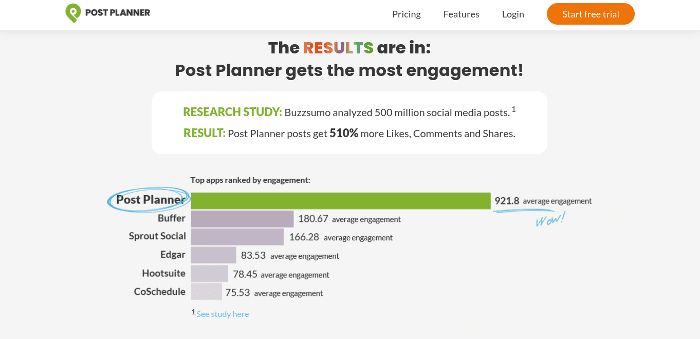

Post Planner doesn’t just say it ranked ahead of the competition. It proves it with a graph and by shouting out the business that did the research, adding extra credibility.

11. In-Line Notifications

In-line notifications are small pop-ups that appear at the bottom of the screen on some websites to notify you of other users’ activity on the site.

They let you know when another user has added an item to their cart, made a purchase, or signed up for something. They may show the actions of many people or one specific individual.

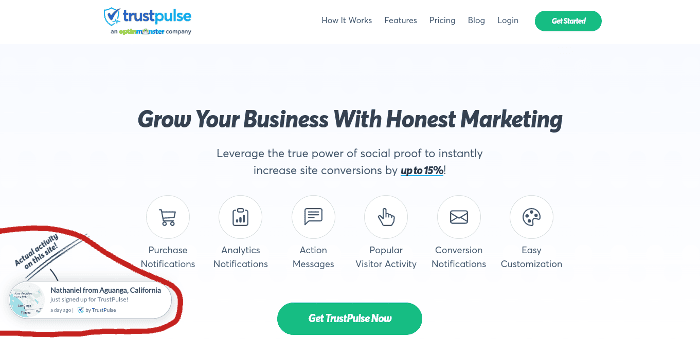

Notifications can draw attention to a product, warn that an item is selling out, or encourage a purchase. TrustPulse employs this user social proof to inform website visitors of new signups.

12. The Wisdom of Crowds

“The Wisdom of Crowds” is a phrase coined by James Surowiecki in his 2005 book. His idea is that a large group of people is often better than individual experts at solving problems and making decisions.

In marketing, it refers to the social influence a large group of people can have on an individual observing that group.

Brands tap into this idea by publishing their large numbers of followers, customers, or subscribers. For instance, Linktree invites website visitors to join its 25M+ users in its Call to Action.

13. Live Conversion Updates

Live conversion updates display increases or decreases in conversions in real time. They show changes in site traffic, reader count, video views, likes, dislikes, shares, or subscribers.

This data reveals both your most and least popular content. It also indicates popular and unpopular days and times of day for website traffic.

Depending on the site plugin, you can display live conversion updates as an ever-changing number, a moving meter, or a live chart.

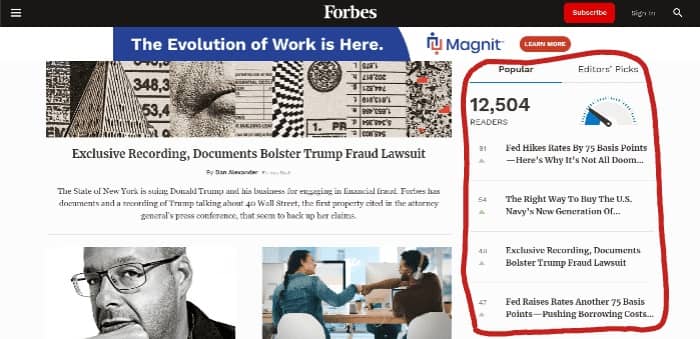

Forbes uses this user social proof tactic on its homepage to show readership numbers and an ever-fresh list of trending articles.

14. Customer Logos

Brand logos are readily recognizable and draw attention. Displaying your clients’ logos shows visitors the industries and sizes of the businesses you serve.

The recognizable symbols also foster a greater sense of trust.

Reach out to your clients for approval before posting their logos on your site. Otherwise, your usage of them for marketing purposes could constitute trademark infringement.

Check out Quuu Promote’s collage of client logos on its homepage, which creatively displays them twice.

15. Case Studies

A case study is a detailed account of how a brand solved its client’s dilemma.

It introduces the client, explains their former problem, reveals how the client discovered and connected with the brand, details how the problem was solved, and highlights how the client benefited.

To paint a complete picture, you’ll need to compile detailed data on your client and interview them. You’ll also need timeline analytics to show cause and effect.

Zendesk devotes a section of its website to its collection of case studies. You can filter them by Industry, Business Challenge, and Region.

16. The “About Us” Page

Your “About Us” page gives website visitors a snapshot of who you are as a brand. This page can foster trust, reliability, and relatability, depending on what you include.

You can even include internal links to other parts of your website or link to subsidiary sites.

Bark’s “About Us” page keeps it short and snappy but oozes personality. Reading this page gives you a clear sense of what this brand is all about. It even slips in a few pieces of social proof.

17. Press Releases

A press release is an announcement sent to journalists about a brand’s newsworthy updates.

It includes a summary and all necessary contact info for journalists to reach out for details to help them build their story.

Press releases help foster a positive relationship with journalists reporting on your brand. Plus, displaying past press releases shares a timeline of your brand’s journey and accomplishments over the years.

The video game company Ubisoft catalogs all press releases in its press center. They are filterable by type and date.

18. Awards

Awards and accolades indicate that you have met specific criteria, reached a milestone, achieved a special status, or received acknowledgment.

Highlight your achievements and acknowledge the institution giving you the award. If metrics such as votes, ratings, or traffic won you the accolade, be sure to show appreciation.

Sendinblue highlights its awards on its homepage.

19. Affiliate Marketing

Affiliate marketers promote a brand’s products and services in their web content in exchange for a commission.

Start an affiliate program and reach out to influencers and existing customers to gauge interest in working with you.

Wellness Mama posts recipes for food, home remedies, and DIY health and beauty products on her blog. Her posts contain affiliate links to brands such as Amazon, ButcherBox, and Starwest Botanicals.

Note: The FTC requires an explicit statement when a post contains affiliate links to avoid potential deception. You are responsible for making a reasonable effort to notify and monitor your affiliates in this regard.

20. Referral Programs

Referral programs incentivize existing customers to promote your brand to friends and family.

People trust those closest to them the most for product and service recommendations. Referral programs leverage this fact by offering rewards for referrals.

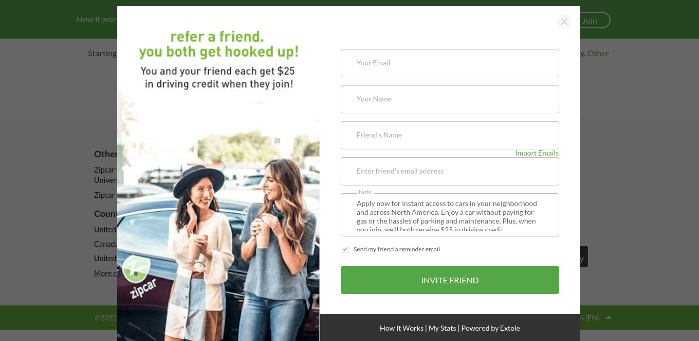

Typically, the new and existing customers both receive perks in this arrangement. In one such program, Zipcar gives each party a $25 driving credit if the friend signs up through a referral.

21. Instagram Photo Campaigns

Instagram photo campaigns can help achieve a specific marketing goal over a specific timeframe. You can promote a sale or new release, tease a big upcoming announcement, or attract new social media followers.

Use a series of related Reels, photos, or Stories to launch your campaign.

Last year, The Chosen ran a photo campaign promoting nightly watch parties for season one and the upcoming premiere of season two.

22. “Best Sellers” Pages

Drive best-seller sales with a web page focused exclusively on those items. This page signals to site visitors that these products are hot commodities.

Back up your claim by displaying proof such as reviews, ratings, rankings, and in-line notifications.

Patagonia promotes best-selling apparel as a shopping category and displays star ratings for each item.

Identifying What Tactics Work Best For Your Brand

Social proof tactics are not one-size-fits-all. You’ll need to determine which ones work best for what you offer.

Customer reviews and ratings are vital for driving conversions for luxury brands. They inspire confidence in customers who are considering such a large purchase.

If you provide high-end services, try promoting accolades, rankings, certificates, and expert endorsements. These assure potential customers that they’ll receive quality service from experienced, well-vetted professionals.

Referral programs and UGC generate brand loyalty for local and small business brands. Lifestyle brands leverage “About Me” pages and Instagram campaigns to create a vibe with their tribe.

Meanwhile, celebrity endorsements score massive conversion boosts for corporations selling everyday products like skincare or fast food.

Finally, nearly all products and services benefit from video-based social proof. 88% of consumers have bought a product or service after watching a brand’s video.

Quick Tips For Leveling Up Your Social Proof Game

Here are a few ways to improve the effectiveness of your social proof marketing:

- Place reviews, testimonials, and client logos on both the homepage and the checkout page: Include social proof on the checkout page to reinforce the purchase decision.

- Hand over your social media to a celebrity, influencer, or expert for the day: Build a positive relationship with your guest, expose your brand to a broader audience, and provide fresh content to your existing audience.

- Engage with your audience: Respond to as many comments, DMs, mentions, emails, and reviews as possible.

- Share business milestones: Create a sense of community by sharing victories and milestones. Publicly thank everyone who helped you reach those milestones.

- Encourage customer reviews: Request reviews in social media posts or purchase follow-up emails. Make it easy by adding a comment box and star rating directly in the email.

WARNING: Avoid These Social Proof Potholes

Conversely, you’ll want to avoid the following common social proof pitfalls:

- No customer reviews: A lack of reviews suggests that people either aren’t buying or aren’t enjoying your product or service. So encourage current customers to give reviews, and address any issues noted.

- Low view/conversion count: Never display negative social proof; low numbers can undermine confidence and drive potential customers away. Improve your numbers first through contests, promotions, and hashtag campaigns.

- Improper use of testimonials: Avoid false advertising by getting testimonials from genuine customers. Ensure all testimonials honestly assess your brand.

- Endorsements outside your niche or misaligned with brand values/image: Only seek endorsements from trustworthy influencers, experts, or celebrities. They should also operate within your niche and match your brand image and values.

Leverage Social Proof Examples & Boost Conversion TODAY

Now that you’ve seen successful real-world social proof examples from other brands, it’s your turn.

Imagine your brand name as the subject of buzz:

- At the water cooler

- At the dinner table

- During phone/text/video conversations

- Across social media

- On podcasts

- In forums and blogs

via 20+ Social Proof Examples of (Real) Conversion Boosting Tactics

Digital Brand Compliance: A New Responsibility of Content Marketing

Is every company now a media company?

Though the answer has yet to be written definitively, I know how most content marketers would respond.

Every successful company’s marketing strategy includes a functioning media management operation.

Put simply, today, you may not be a media company, but you definitely are starting to operate like one.

Just a few decades ago, organizing and scaling digital content management was thought to be a challenge only for traditional media companies, because only they had that much content.

Take the case of CNN. In 1980, the upstart cable network introduced a disruptive idea: global news 24 hours a day. In the mid-1990s, the company became one of the first broadcasting media companies to strategically invest in a new thing called “digital asset management.” Its Archive Project aimed to digitally manage 200,000 hours of archival material and to plan for more than 40,000 hours of new footage arriving each year.

The business mandate was simple. As Gordon Castle, then CNN’s senior vice president of technology, put it:

We have to shift to a digital system where content will be accessible to all our broadcast and interactive services. This is a paradigm change that will affect broadcasting everywhere.

It most certainly did.

Think about this. In the 1990s, CNN was building a system for 40,000 hours of new footage each year. By 2022, the company reports 264 million hours of video consumed each year. That’s 6,600 times the 1990s figure.

And in 2022, it’s no longer just CNN and other media companies that need to wrangle, manage, and maintain a tsunami of digital marketing media assets. Product and service businesses face the same challenge.

Digital content management challenges for businesses

Over the last few years, a rapidly evolving set of digital content management responsibilities has fallen into the laps of marketing practitioners. Among the challenges you face:

- Diverse digital content formats, including images, audio, video, and even interactive applications, create complex and dynamic media experiences for audiences. Those digital media assets must be managed more rapidly and in a more integrated way.

- Reuse and repurposing of others’ content in your business must comply with complex usage rights, copyright regulations, and licensing agreements.

- As more brands act like media companies, monitoring the quality of digital content assets on third-party channels has become critical. These assessments range from ensuring an image or video uses the appropriate colors to complying with usage requirements and paying royalties based on consumption.

Together, these challenges have given rise to an emerging market need for content operations management – the real-time capability to manage brand compliance across digital assets on multiple channels.

At CMI, I call it digital brand compliance. This emerging market uniquely combines three existing challenges:

- Changing nature of digital asset management (DAM)

- Evolved, next generation of digital asset rights management (DRM)

- Emerging space of brand content quality management (CQM)

Let’s look at each of these.

1. Digital asset management allows for dynamic engagement

In the earliest days of digital asset management, a system of record acted as a single source of truth. It stored what was OK to use and archived content for historical posterity. The CNN archive project is a perfect example of this.

These DAMs were then coupled with other systems of record, such as product information management (PIM). A PIM system records more technical product content, such as merchandise SKUs, labeling information, ingredients, and other basic product information.

The overriding goal of this dual system was to keep usable assets consistently available in a library that managers could draw on whenever they needed them.

However, over the last few years, both DAM and PIM systems have evolved from simple archive repositories to web content management systems – systems of engagement. Now they directly serve live content to audiences.

Both DAM and PIM systems now require core integration, not just to manage the content but to present it legally and in compliance, and to make it accessible to both internal teams and public audiences. This responsibility made content access rules more complex and gave rise to more dynamic, cloud-based digital rights management solutions.

2. Digital rights management eases complex compliance (somewhat)

As the digital world emerged in the early 2000s, the term “digital rights management” meant protecting and securing media companies’ digital content to ensure it couldn’t be copied, distributed, or accessed without authorization.

However, as brands began to act more like media producers, the next generation of digital rights management arose. For marketing and advertising teams, rights management has become a complex strategy and process to ensure all the digital assets they create comply with usage rights, copyright regulations, and licensing agreements.

Just as DAM systems have needed to serve live content to audiences, DRM solutions have become more sophisticated and dynamic. Teams must manage usage rights, licensing agreements, regional availability, and other compliance standards in real time.

Finally, as brands combined easy access to, availability of, and display of digital assets with structured rights management, a third element entered the ecosystem. Brands now need to manage an expanded definition of digital assets against new brand compliance rules.

3. Automated content quality management

Most brands now employ content marketing as part of their marketing strategy and use numerous digital channels to interact with their customers. To meet the overwhelming demand for content, as CMI’s research has shown, the number of people responsible for creating brand content – internal teams, executives, agencies, freelancers, engaged influencers, and even customers – has increased exponentially.

As a result, managing content quality has become more complex. New solutions have emerged to help monitor and manage digital content, both before it is published and after it has reached its display destination for various audiences. These content quality management (CQM) solutions are still relatively niche, and most existing solutions monitor only essential digital asset quality and conformance to existing internal repositories of assets.

However, a rapidly growing segment of the CQM market is evolving to monitor rich media assets (e.g., video, audio, applications) against more sophisticated content-usage standards, such as resolution, aspect ratio, colors in images or video, usage violations, expirations, and royalties or other payment measurements.

Digital brand compliance – a new market

As these three content management practices evolve, they intersect to form a new solution category – digital brand compliance. It merges these modern technology systems to help content practitioners make sense of today’s challenges.

Digital brand compliance is a necessary new market for several reasons:

- Digital asset management systems have become more real-time systems of engagement.

- Digital rights systems have become more complex and part of an active brand management strategy.

- Content quality management systems have grown in complexity and popularity for myriad content marketing programs and extend to rich media monitoring across diverse third-party platforms.

To help you better understand the emergence of digital brand compliance, we did a deep dive into how these functions are coming together. CMI’s Market Guide: The Rise of Digital Brand Compliance, sponsored by FADEL, details the new but rapidly growing segment of managing and monitoring the compliance aspects of a media management strategy. You will find:

- More detailed overview of DAM, DRM, and CQM segments

- Exploration of why digital brand compliance is critical to business today

- Market definition of digital brand compliance

- Forecasted market growth of digital brand compliance solutions

- Predicted future trends of digital brand compliance as a holistic solution

Digital brand compliance is poised for tremendous growth in the next five years. Not every company may be a media company yet, but you are almost certainly adapting your operations to ensure your strategic media speaks just the way you want it to.

via Digital Brand Compliance: A New Responsibility of Content Marketing